Fluid Prompts: A Technique for Stable Diffusion Model Analysis

Learn how to use fluid prompts to analyze AI image generation models, detect biases, and improve your prompting skills. A practical guide with real-world examples using popular models.

Ever wonder what your favorite Stable Diffusion model is really capable of? Or maybe you’ve noticed it keeps generating certain types of images even when you don’t ask for them? That’s where fluid prompts come in—a simple yet powerful technique I’ve been using to better understand AI models.

TL;DR

Fluid prompts are minimal, open-ended prompts that allow AI models to express their default tendencies. Think of them as a blank canvas with just a few constraints. I discovered that prompts like “detailed PNG” or “a captivating bold фото.” are fantastic for:

- Quickly revealing model biases (especially useful before diving into serious projects!)

- Understanding what your model is really good at

- Spotting potential technical issues like overtraining

Want to try it yourself? Jump straight to the practical examples, where we analyze DreamShaper and CyberRealistic models. But I recommend reading the concept explanation first—it’s worth it, I promise!

What is a fluid prompt?

The term itself is not a common one; I’m not even sure if anybody else uses it. So, please let me know if you’re familiar with the concept and know a better name for it. After all, the whole Generative AI space is still very new, and we’re still figuring things out.

So what is it really?

A fluid prompt is one that is stable (it minimizes the chance of artifacts) and, at the same time, open-ended. It gives the model enough freedom to express itself by avoiding strict instructions on what to depict.

But what is the purpose of a prompt that does not describe an image? There are several interesting use cases:

- Model bias detection

- A stable environment for experiments with different words

- A starting point for building more complex prompts

In this post, we’ll focus on the first one—model bias detection. It is probably the easiest aspect of fluid prompts to grasp and will serve as a good starting point for understanding the concept better. I hope to cover the other use cases in separate posts later (and once I do, I’ll add the links here).

Let’s dive in.

Model bias detection

When you experiment with a new model, it is a good idea to check whether it is biased towards a certain type of image. The best way to do this is to use a prompt that does not describe the image’s subject and instead only pushes the image quality and a general direction.

Let’s take a look at a couple of fluid prompts.

detailed PNG

It is probably the simplest one I use, and because of its simplicity, it is also the most flexible. I often use it first on a new model. Do not expect to get a quality image from it; that’s not the purpose of a fluid prompt. But it will show you the strongest biases of the model. It will also reveal problems with the model, if any, such as overtraining or “frying” caused by some merging methods (a separate significant topic, so I will not dive into it now).

A captivating bold фото.

This one is more complex and has its quirks. Let’s break it apart to understand how each word affects the image.

A- Yes, we are going to discuss the use of an article =). Empirically, it makes the image more stable.

captivating- This one has several effects. It improves image quality and composition. It is also biased toward depicting a human, often with the face close to the camera.

bold- This one is optional but works as a general feature multiplier. It helps reveal subtler biases by making them more prominent.

фото- That’s an interesting one. It is one of only two Russian words represented in the CLIP vocabulary as a single token. I found that it carries a much stronger meaning of “a photo of a human” than the English “photo”, producing better depictions of humans, at least with the Stable Diffusion 1.5-based models that I’ve tested. As a negative side effect, it often leads to black-and-white images (because of the many old photos in the training dataset). But that’s not important for our purposes.

.- Guess what? It is a dot. And it is as important as the article. The purpose is the same: overall stabilization.

This knowledge of word effects is the result of numerous experiments with synonyms and their placement. But it is not a dogma. I constantly experiment with new words and find combinations that work better or have useful effects.
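One way to organize this kind of word-by-word experiment is to generate every variant of the prompt with exactly one part removed, then render each variant with the same seeds and compare. The snippet below is only a minimal sketch of that idea; the helper names `join_prompt` and `ablation_variants` are made up for illustration and are not part of any tool.

```python
# Components of the second prompt, in order, as listed in the post.
PARTS = ["A", "captivating", "bold", "фото", "."]


def join_prompt(parts):
    """Join words with spaces; a "." part is attached at the end without a space."""
    words = [p for p in parts if p != "."]
    text = " ".join(words)
    return text + "." if "." in parts else text


def ablation_variants(parts):
    """Return (dropped_part, prompt) pairs, each prompt missing exactly one part."""
    variants = []
    for i, dropped in enumerate(parts):
        kept = parts[:i] + parts[i + 1:]
        variants.append((dropped, join_prompt(kept)))
    return variants
```

Calling `ablation_variants(PARTS)` gives five prompts, one per removed part. Comparing each against the full prompt at the same seed is one way to see what a single word contributes.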

Now let’s see how these prompts work in practice. For a start, we’ll generate twelve images with each of two popular checkpoints: DreamShaper v8 and CyberRealistic v6.

The negative prompt is the following:

naked, bairn

naked- Self-explanatory.

bairn- A child, but subtler than “child”. It helps to control the age but does not reduce cuteness that much.

Note on negative prompt: Usually, I do not use negative prompts in experiments because I want to reveal potential problems with the model. But in this case, I will make an exception only because I need SFW images to share. In actual experiments, you would not use negative prompts because if the model is biased toward NSFW images, I bet you want to be aware of it.

Other parameters:

- seed: 42

- steps: 25

- CFG scale: 11.6

- sampler: Euler a

- Ignore CFG scale during early sampling: 0.16

- width: 576

- height: 768
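If you drive your UI through an API instead of clicking, it helps to keep these settings in one place. Here is a rough sketch that packs them into a request body. The field names follow the txt2img request format of the AUTOMATIC1111 web UI API, which is an assumption on my side, so check them against whatever tool you actually use. The “Ignore CFG scale during early sampling” setting is left out on purpose because I am not sure which request field it maps to. The function name `build_payload` is hypothetical.

```python
import json


def build_payload(prompt, negative_prompt="naked, bairn", seed=42, n_images=12):
    """Build a txt2img-style request body from the settings listed in the post.

    Field names are an assumption (AUTOMATIC1111-style API); adjust for your tool.
    """
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,  # pass "" to see the unfiltered biases
        "seed": seed,
        "steps": 25,
        "cfg_scale": 11.6,
        "sampler_name": "Euler a",
        "width": 576,
        "height": 768,
        "n_iter": n_images,  # assumption: one image per iteration
        "batch_size": 1,
    }


def payload_json(payload):
    """Serialize the payload, keeping non-ASCII prompt text readable."""
    return json.dumps(payload, ensure_ascii=False, indent=2)
```

Passing `negative_prompt=""` gives the variant you would use in a real bias check, where nothing should be filtered out.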

Let’s start with the first model.

DreamShaper v8 analysis

First, we’ll generate 12 images using the detailed PNG prompt. It’s useful to use the same starting seed in all your experiments, so you’ll eventually become familiar with the usual shapes produced from the same latent noise.
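To keep runs comparable, it can help to write down the whole experiment plan up front: every model, every prompt, and the same list of seeds. The sketch below assumes that the twelve images use consecutive seeds starting from the first one (some UIs do this for a batch, but that is an assumption about your tool). `experiment_grid` is a hypothetical helper name.

```python
from itertools import product

MODELS = ["DreamShaper v8", "CyberRealistic v6"]
PROMPTS = ["detailed PNG", "A captivating bold фото."]


def experiment_grid(models, prompts, start_seed=42, count=12):
    """List every (model, prompt, seed) combination with a shared seed range."""
    # Assumption: image i of a set uses seed start_seed + i.
    seeds = [start_seed + i for i in range(count)]
    return [
        {"model": model, "prompt": prompt, "seed": seed}
        for model, prompt, seed in product(models, prompts, seeds)
    ]
```

With two models, two prompts, and twelve seeds, `experiment_grid(MODELS, PROMPTS)` lists 48 runs, and any two runs that share a seed start from the same latent noise.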

DreamShaper v8 12 images with “detailed PNG” prompt

First, let’s separate the effects of the individual words.

detailed- Leads to more features and tiny details. In such a short prompt, it is too powerful, leading to vivid, sometimes overwhelming, details.

PNG- Often produces blank backgrounds. Radiant colors. Sharp edges. Illustration style.

Now, once we know the effects of the words, let’s analyze the model biases.

- Every image depicts a human, which is a bias. However, we have a variety of ages and genders, which is a sign of a well-balanced model.

- A clear bias towards a single race.

- There are no signs of any overtraining or frying.

- If you generated the same set without a negative prompt, you would find a bias towards naked figures. It is manageable, but you should be aware of it.
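If you want numbers instead of impressions, you can label each image by hand and count the labels. The sketch below is one possible way to do that. The labels “A”, “B”, and “C” are placeholders invented for the example, not results from the images in this post, and the 0.5 threshold is an arbitrary assumption.

```python
from collections import Counter


def share_by_label(labels):
    """Return each label's share of the set, most common label first."""
    if not labels:
        return {}
    counts = Counter(labels)
    total = len(labels)
    return {label: n / total for label, n in counts.most_common()}


def dominant_labels(labels, threshold=0.5):
    """Labels whose share exceeds the threshold (threshold is an arbitrary choice)."""
    return [label for label, share in share_by_label(labels).items() if share > threshold]


# Placeholder annotations for a 12-image set; replace with your own notes.
example_labels = ["A"] * 8 + ["B"] * 3 + ["C"]
```

For the placeholder set, `dominant_labels(example_labels)` flags only “A”, which covers 8 of the 12 images.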

That’s pretty much all we can learn from this simple prompt. Let’s move on to the next one: the same set of images, but with the “a captivating bold фото.” prompt.

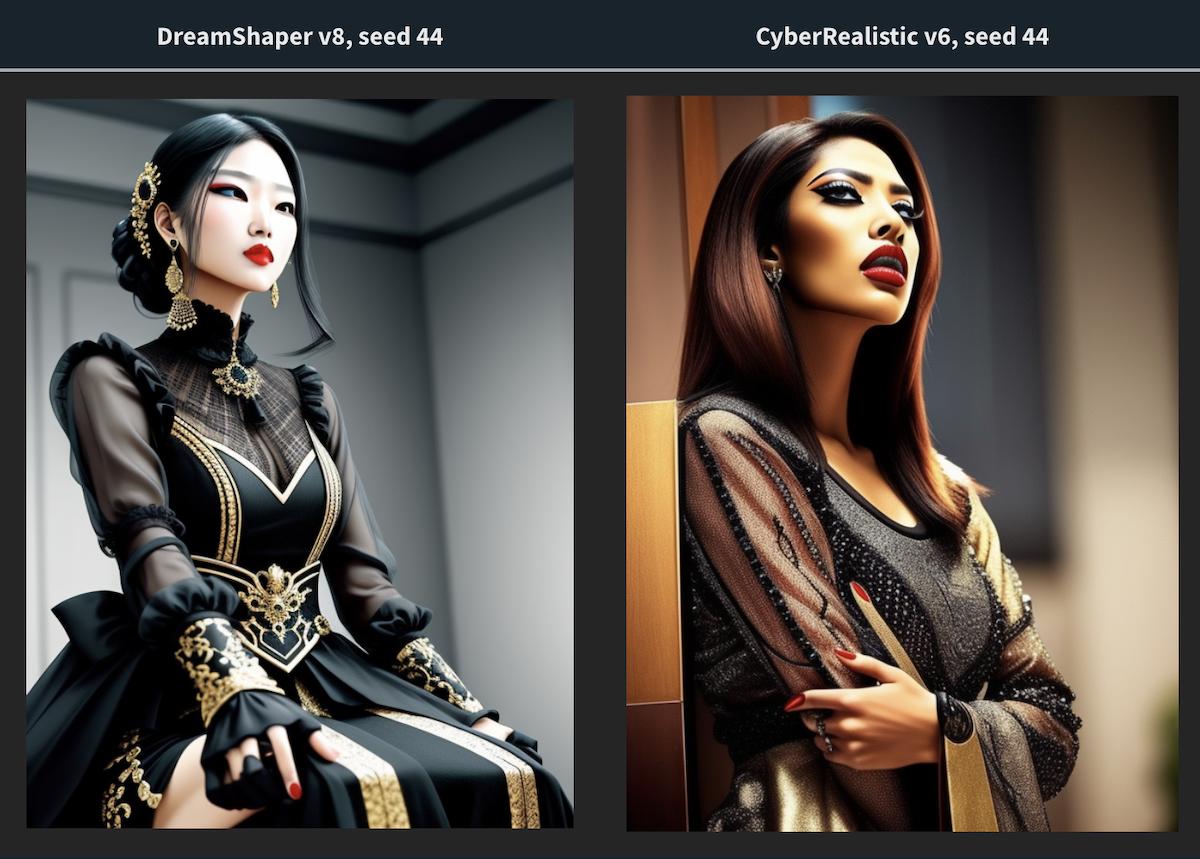

DreamShaper v8 12 images with “a captivating bold фото.” prompt

This prompt is designed to generate portraits, and it does a great job at it. However, the prompt itself has a slight bias towards females. Keep that in mind when you analyze the results.

- The race bias is much more prominent.

- You would see even stronger NSFW bias without a negative prompt.

- We also find a less obvious gender bias: all the subjects are female.

Conclusion: The DreamShaper v8 model is well built but biased towards Asian females. If you tested it with more variants of the fluid prompt, you would also find that the image style tends to lean towards illustration and 2.5D anime. But we don’t see it here because both “PNG” and “фото” have powerful effects on the style.

CyberRealistic v6 analysis

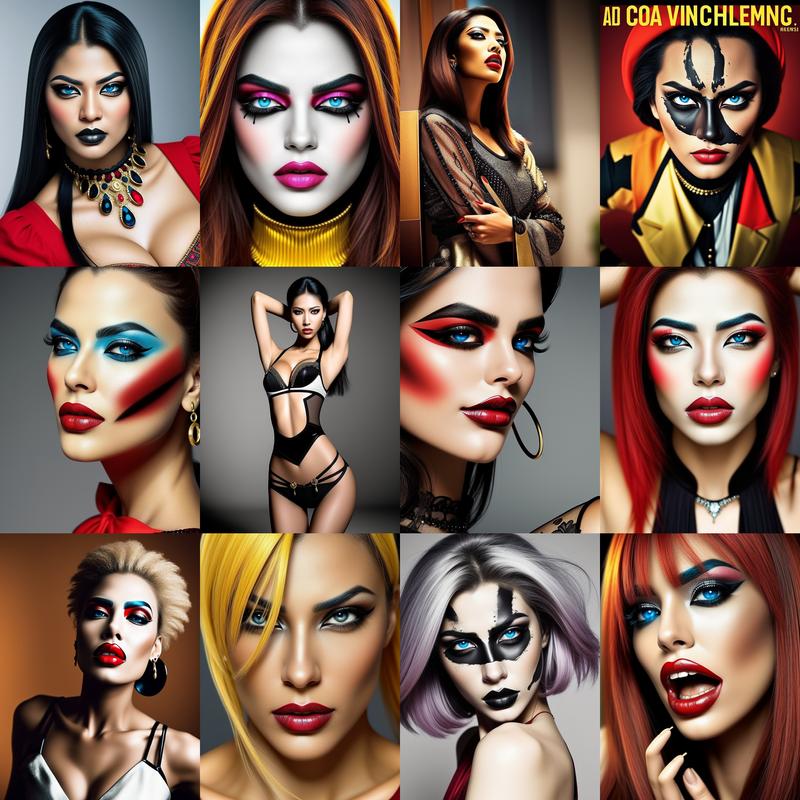

CyberRealistic v6 12 images with “detailed PNG” prompt

Since we already have the same set generated with DreamShaper v8, it is also useful to compare pictures from the two models pair by pair. Images that share a seed start from the same initial latent noise, so what stays similar shows the effect of the seed, and what changes shows the effect of the model itself.
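I pick the pairs by eye, but if you have many images, a crude score can help shortlist candidates. The sketch below ranks same-seed pairs by mean absolute pixel difference. It assumes each image is already loaded as a flat list of grayscale values of equal length; the loading step and the helper names are hypothetical. Pixel difference is only a rough proxy for “looks different”, not a replacement for looking at the images.

```python
def mean_abs_diff(pixels_a, pixels_b):
    """Average absolute difference between two equally sized pixel lists."""
    if len(pixels_a) != len(pixels_b):
        raise ValueError("images must have the same number of pixels")
    return sum(abs(a - b) for a, b in zip(pixels_a, pixels_b)) / len(pixels_a)


def most_different(images_a, images_b, top=3):
    """Return the seeds whose image pairs differ the most, largest difference first.

    images_a and images_b map seed -> flat list of grayscale pixel values.
    Seeds present in only one of the two sets are ignored.
    """
    shared_seeds = set(images_a) & set(images_b)
    scored = [(mean_abs_diff(images_a[s], images_b[s]), s) for s in shared_seeds]
    scored.sort(reverse=True)
    return [seed for _, seed in scored[:top]]
```

The returned seeds are the pairs worth opening side by side first.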

Let’s look at the most different images with the same seed.

This comparison shows that the CyberRealistic v6 model produces better facial features and more realistic skin, hair, and fabric textures.

This pair shows that the CyberRealistic v6 model is less biased towards humans and strongly biased towards intricate realistic details.

In this case, we see that the CyberRealistic v6 model has a more substantial bias towards close-up portraits.

Now, let’s see how the “a captivating bold фото.” prompt works with this model. This is where the model should shine.

CyberRealistic v6 12 images with “a captivating bold фото.” prompt

Let’s compare the results with the DreamShaper v8 model. As I mentioned before, the best pairs are the most different.

This pair reveals a strong bias towards race and gender again. But this time, it is a Caucasian female.

The bias towards the close-up portraits is especially strong here.

Conclusion: The CyberRealistic v6 model is a beast when it comes to realism and portraiture. It is also well-balanced and doesn’t show signs of overtraining.

Key takeaways from fluid prompt analysis

Using just two simple fluid prompts, we were able to reveal several important characteristics of both models:

- Inherent Biases

  - DreamShaper v8 shows a bias towards Asian females and illustration-style images

  - CyberRealistic v6 leans towards Caucasian females and close-up portraits

- Technical Strengths

  - DreamShaper v8 excels at balanced, detailed compositions with anime-influenced aesthetics

  - CyberRealistic v6 demonstrates superior realism, especially in facial features and textures

- Model Health

  - Neither model showed signs of overtraining or “frying”

  - Both models demonstrated consistent, stable outputs across different seeds

This exercise demonstrates why fluid prompts are invaluable for model evaluation. By using minimal, open-ended prompts, we can:

- Quickly identify a model’s default tendencies and biases

- Assess technical quality and consistency

- Understand a model’s strengths and limitations before investing time in complex prompts

These insights help us make informed decisions about which model to use for specific projects and how to compensate for any inherent biases in our prompts.